From legislation to implementation: Exploring how to prototype privacy bills through human-centered policymaking + design

By Anna Chung, Dennis Jen, Jasmine McNealy, Stephanie Nguyen

The rise of data privacy breaches and a look into policies that address these challenges

“If a parent comes in and says, ‘Oh, I want to renew my son’s books,’ I can’t go on their son’s account without their son’s card. We never look at anyone’s reading history. We cannot tell someone what is on an account without a library card or ID. We protect everyone’s privacy that way. That is part of the foundation of using a public library.” —Janet Linder, a lawyer and legal writer, editor, and a children’s librarian in the Boston area.

Informational privacy, the ability to manage access to information about oneself, has often been controlled by the powerful and designed by the minority, as demonstrated by the dominance of particular platforms and technology organizations. Today, in just 60 seconds, the world produces 4.5 million Google searches, 1.4 million Tinder swipes, and 277 thousand Instagram stories. Platform and organizational dominance, coupled with the massive volume of personal data used by businesses, government agencies, and civil society organizations, makes individuals uniquely vulnerable to data collection, manipulation, and insecurity. For context, data breaches in 2019 exposed 4.1 billion records, including banking information, login credentials, and location data.

Members of Congress have responded by proposing several bills, many of which focus on limiting data collection and aggregation through system design features. The “like” button, for example, allows the individual to bookmark information or send a one-click response to a post. The simple tap of a “like” button gives companies data, allowing them to create inferences about a person’s affinities, including political affiliations [1], mood and emotions [2], and possible purchasing behavior [3]. This information can then be used to create groups and subgroups of people, who could ultimately be influenced by tailor-made content. From personal advertisements to the kinds of posts platform users encounter, this content can persuade someone to spend more time on the platform, thereby disclosing more data.

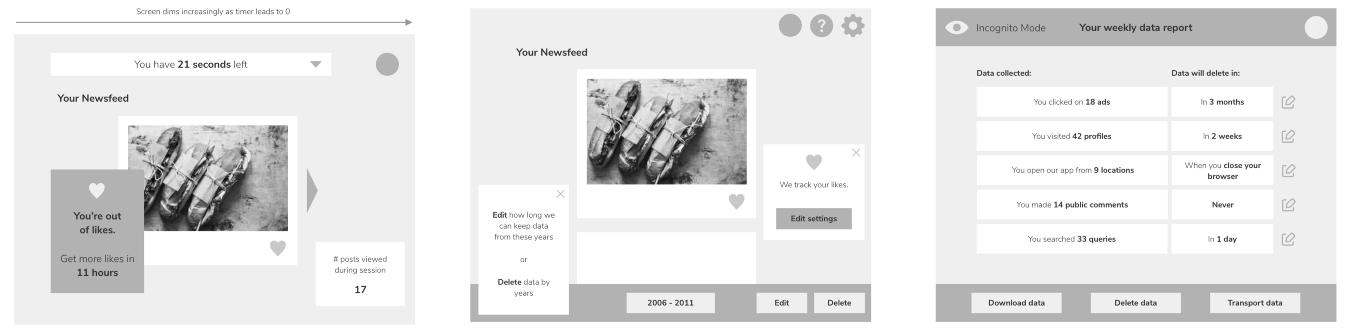

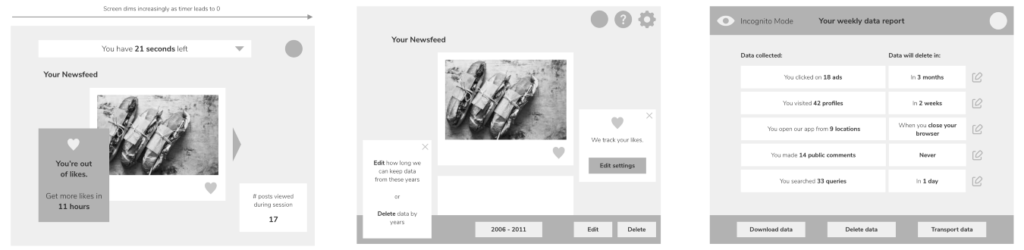

Legislators have introduced data protection bills that target potentially misleading design features embedded in these systems. Some policymakers want consumers to regain control over their online experience by letting them opt out of the filter bubble created through Big Tech algorithms, as the Filter Bubble Transparency Act illustrates. Another bill, the Social Media Addiction Reduction Technology (SMART) Act, focuses on more specific design elements by enforcing time limits and “neutral presentation” and by banning dark patterns, infinite scroll, and badges and awards linked to engagement or usage. In a similar vein, the Deceptive Experiences To Online Users Reduction (DETOUR) Act prohibits dark patterns and deceptive tricks, as well as A/B testing without disclosure to users. More comprehensively, many privacy bills introduced between 2018 and 2020 include “right of access, deletion, portability, and transparent notice and consent,” which may also directly affect visual elements in platform interfaces.

While these bills are intended to protect people from harm resulting from data collection and use, some leave big gaps in implementation. For example, how do we ban filter bubbles for platforms that have thousands of filtering mechanisms? What does it look like to design an effective user experience that incorporates the right to data portability? There appears, then, to be a gap between policy and practice that needs to be closed to make law more effective. How might we connect policy principles to implementation so that bills meet the needs of the people and communities they were intended for?

These challenges are not new. Several organizations have outlined ways to better bridge the gap between policy creation and implementation. For example, Design Delivery Policy describes the opportunity to better connect policymakers and government service delivery. “By tightly coupling policy and delivery, governments can use data about how people actually experience government services to narrow the implementation gap and help policies get the outcome they intend,” explains Code for America’s former Executive Director Jen Pahlka. Teams at Georgetown’s Beeck Center, Harvard Kennedy School, and IDEO CoLab explored user-centered policymaking, creating tools, frameworks, and materials to assist policymakers. “It is imperative that policy makers understand their role in implementation, and that implementers be at the table as policy is designed,” the Beeck Center team outlines.

There is no substitute for speaking with the communities that are often left out of decision-making processes. Alex Gaynor, Security Engineer and Chief Information Security Officer at Alloy, said of some of the bill tenets that “user research is necessary to understand what a term like duty of loyalty means in relation to individuals’ expectations.” Advocacy organizations and human rights and civil rights groups work to protect human and consumer rights, so it would be helpful to gather their perspectives and feedback as well. Policymakers and industry practitioners could also “create easy channels for advocacy and [human] rights groups to provide feedback and publicly respond to such feedback,” explains Sage Cheng, Design Lead at Access Now.

“I think our whole model related to privacy is built on building relationships through trust,” Charyti Reiter, Director of Programs at On The Rise, told us. “Particularly for people on the margins, they don’t have the time to manage sharing of information about themselves in ways that people who have access to other means do.”

Human-centered policymaking is needed alongside the processes used both in Congress to create strong policies and in industry to design privacy-protecting features in tech. User needs must be linked more tightly to tech products and policymaking. Policymakers should build on existing resources to talk to stakeholders who may experience data-related harm and integrate what they learn into their process. With this in mind, we created a Policy Prototyping Guide that provides a roadmap for the roles needed and the step-by-step process that lawmakers can follow to link bills to practice.

What might a process for human-centered policymaking look like?

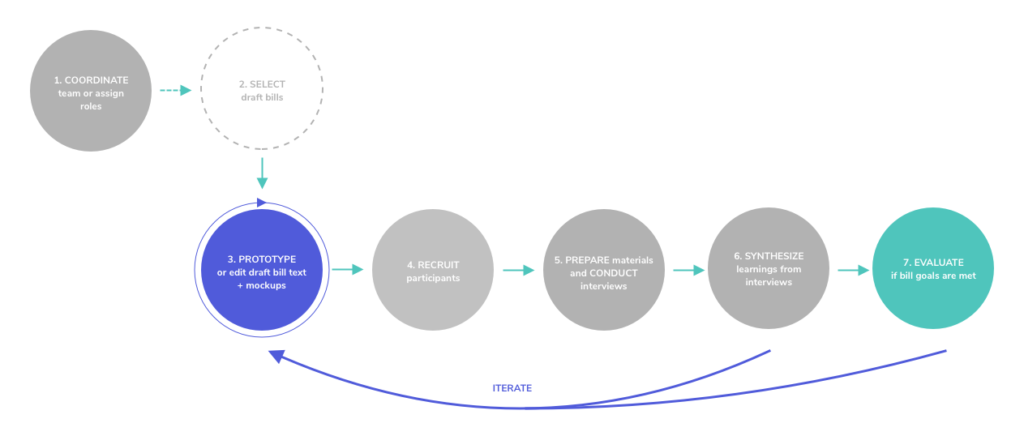

Our Policy Prototyping Guide has 7 steps:

- Coordinate team or assign roles: We recommend having design, technical, policy and product management perspectives. We recognize resource constraints and that certain roles will not naturally fit with your current team configuration. We make recommendations for how to address staffing constraints in our downloadable Policy Prototyping Guide on our research page.

- Select draft bills: Once you’ve assembled the team and roles, pick a policy, establish bill goals (e.g., participants are able to understand concepts without major confusion), and establish research questions (e.g., how can “data portability” be effectively understood?).

- Prototype or edit draft bill text and mockups: Select and sketch features to prototype, collect preliminary feedback, iterate on prototypes, and select a “winner” prototype.

- Recruit participants: Map out the potential audience(s) that may be impacted by the bill and reach out to people for interviews (an email script helps with this). Further expand your network by asking participants for others to interview.

- Prepare materials and conduct interviews: For example, show a text summary of the bill, visuals of bill concepts, and the “winner” prototype. Ask questions based on your interview protocol.

- Synthesize learnings from the interviews: Review the interviews to identify perceived strengths and weaknesses in the bill and prioritize insights. If more iteration is needed, go back to step 3 (prototyping or editing the draft bill text and mockups).

- Evaluate if bill goals are met: Review bill goals and research questions. Share updated prototypes and feedback.

This guide is not one-size-fits-all. It is a framework designed to use policy teams’ existing capacity and resources to execute this work. We outline responsibilities for each role and suggest alternatives, provide an example interview protocol, and detail the steps in our downloadable Policy Prototyping Guide on our research page.

Throughout this process, we spoke with many policymakers, asking: What are the biggest challenges and gaps in creating and implementing policy? Do our recommendations seem feasible? One policymaker told us that gathering public reactions in this way “could be really powerful,” with the caveat that resources may be limited on Congressional teams. Another policymaker explained some limitations of this approach.

“A lot of the bills deal with reporting, privacy policies, etc. Those are harder (or at least less interesting) for visual prototyping,” they told us. While our research uses visual prototypes, we believe this framework can also be applied to evaluate policies without visual components. The key is to create concrete scenarios that participants can react to or raise potential challenges about. For example, the team can ask landlords and tenants to write narratives of how their experiences would change if a tenants-rights policy were to pass. Interviewees can then respond in specific ways, enabling your team to better evaluate the bill.

Our process: How did we create the policy prototyping guide?

To explore several recent privacy bills relating to design, we formed a team with varied experience in law, design, engineering, human-computer interaction research, and government policy. Having such different perspectives on the team forced us to clarify language and meaning, reach out to diverse audiences, and reconsider how we derive insights from findings.

We grounded our research in three US federal draft policies: The Social Media Addiction Reduction Technology (SMART) Act, The Online Privacy Act (OPA), and The Consumer Online Privacy Rights Act (COPRA). For each policy, we created a mockup to show how its provisions might look in a generic social media platform. We chose to use social media as a reference point because it’s a common technology that people with varying levels of technical expertise use, and where personal data can easily be disclosed.

With prototypes in hand, we interviewed 41 people from different industries and backgrounds, including lawyers, industry practitioners, and government employees. We asked them how they think about privacy in their fields, what they see as the strengths and challenges of the privacy bills and prototypes, and what they think of the proposed policies’ approach to modifying social media design.

One recommendation for policymakers, for example, is to talk to stakeholders who experience data-related harm and to integrate their perspectives into the policymaking process. We spoke with physicians about the intersection of patient trust, privacy, and physical and mental health. “The apps and websites young people use don’t always put their best interests first,” Maria, a pediatrician in Boston, told us. “Because of their developmental age, children and especially adolescents are prone to make mistakes on social media that could negatively impact their future job prospects, social life and mental health.”

For design practitioners and technology organizations, a recommendation is to recognize that individuals want to be empowered to control what can be done with their data, without being overburdened or having the platform experience diminished. Organizations should, for example, provide granular controls for the kinds of information an individual chooses to share, practice data minimization, and collect no data at all unless individuals are first allowed to decide whether they wish to participate in the data use scheme.

“We’re now at a point where consumers want greater access and control and uptake of managing their data. But the real challenge is [that they] still don’t know [data is] different […] and how it can be leveraged or how it shouldn’t be leveraged to make appropriate decisions.”

Devin Gladden, Manager of Federal Affairs at the American Automobile Association (AAA)

Check out our reports for a full list of recommendations.

Moving human-centered policy prototyping forward

Getting feedback from the community in ways that include visualizing bills in concrete, relatable interfaces will enable policymakers to draw out unanticipated challenges that a bill would pose for people’s digital experiences. Policymakers can also better clarify confusing, abstract concepts littered throughout policy by talking directly to people who may be impacted by these policies. Our hope is that by putting this process out in the open, we’ve created a toolkit that improves the efficacy of policy after each iteration, and that the process itself can evolve as teams make it their own and shape it for their needs.

There is still work to do. This research can be improved in many ways, and we aim to continuously improve our frameworks and protocol with feedback. We, therefore, ask the following questions:

- When and how might this toolkit be most effective? How does it fall short? How can we improve it?

- What existing human-centered policymaking efforts can we collaborate and partner with to strengthen the outcomes of this work?

- How might a process like this be better institutionalized in policymaking environments?

- How can we make this process more useful for community groups and civil society organizations?

We will be hosting a series of follow-up events and sessions to discuss the research in more detail. Please join us for an upcoming webinar hosted by University of Florida, Center for Public Interest Communications/Consortium on Trust in Media & Technology called “Policy Prototyping as cultivating trust through political engagement.” Click here to RSVP for this and any upcoming events organized by the team.

For more information, visit the Let’s Talk Privacy website, which goes into greater detail about our work, and check out our research page to download our reports and Policy Prototyping Guide. We welcome your thoughts and would like to hear from you. Leave us a note at letstalkprivacy@media.mit.edu.

---

Cited sources:

[1] Kristensen, J. B., Albrechtsen, T., Dahl-Nielsen, E., Jensen, M., Skovrind, M., & Bornakke, T. (2017). Parsimonious data: How a single Facebook like predicts voting behavior in multiparty systems. PLoS ONE, 12(9).

[2] Bazarova, N. N., Choi, Y. H., Schwanda Sosik, V., Cosley, D., & Whitlock, J. (2015, February). Social sharing of emotions on Facebook: Channel differences, satisfaction, and replies. In Proceedings of the 18th ACM conference on computer supported cooperative work & social computing (pp. 154-164).

[3] Zhang, Y., & Pennacchiotti, M. (2013). Predicting purchase behaviors from social media. In Proceedings of the 22nd international conference on World Wide Web (pp. 1521-1532). DOI: https://doi.org/10.1145/2488388.2488521