Are social computing and data science just tools for the powerful, or can they be used to question power and reshape the structures that influence us? It’s a question I’ve been wondering about as I’ve watched civic tech & academic communities idolize the employees and “alums” of big corporations and governments, partly because of the resources they have, and partly because it seems like these companies are the sole gatekeepers of social experiments and large-scale interventions to influence society.

A few weeks ago at the Berkman Cooperation Working Group that I co-facilitate, Berkeley PhD student Stuart Geiger argued that data science and social technology design *can* be used to critique and reshape power. In his talk, Stuart described “Successor Systems”: software that questions existing systems with alternative knowledge and also supports cooperation for change. Stuart also told us about his own work to design these systems and how they connect with ideas from feminist science and technology studies.

In his PhD, Stuart researches the role that bots and other automated systems play in governing mediated communities, from Wikipedia and Reddit to Bitcoin. His work on the idea of Successor Systems is related and comes out of a collaboration with Aaron Halfaker at the Wikimedia Foundation. When he spoke to the Cooperation Working Group, we were just back from Pittsburgh, where we had given a talk contrasting civic values and output values in human computation and crowdsourcing at the Citizen-X workshop at HCOMP (I’ll blog about it soon!). Here’s the liveblog:

Moving Beyond Algorithmic Transparency

Stuart starts by talking about the limitations of algorithmic transparency, a topic I have blogged about before. In critical algorithm studies, humanities scholars and social scientists tend to treat algorithms as black boxes that purport to be objective. Researchers call this claim of objectivity into question, highlighting instead the material and social processes, connected to history and power, that constitute algorithms. More recently, however, Tarleton Gillespie has argued that we need to go beyond opening up the black box: “A sociological inquiry into algorithms should aspire to reveal the complex workings of this knowledge machine, both the process by which the algorithm chooses information for users and the social process by which it is made into a legitimate system” (Gillespie 2014). According to Gillespie, we can open up two parts of an algorithm: firstly, the way that the algorithmic code works, and secondly, the way that code is embedded into a larger social landscape.

Stuart’s work on successor systems attempts to bridge these two areas, seeing algorithms as information processing agents whose code is embedded in a social landscape. To bridge these areas, we need to look to feminist science and technology studies, Stuart tells us. He calls the idea of successor systems “a sensitizing concept, calling our attention to how computational systems, as algorithmic systems, are systems of knowledge production.” Stuart argues that since technologies are systems of knowledge production, we can connect our thinking on algorithms to thinking in STS in the 1980s, especially Sandra Harding’s “The Science Question in Feminism” (pdf) and Donna Haraway’s 1988 work “Situated Knowledges: The Science Question in Feminism and the Privilege of Partial Perspective” (JSTOR). Harding and Haraway were primarily concerned with how knowledge produced from certain standpoints became totalizing and came to be seen as if it were produced from no standpoint at all, which is the same problem that Gillespie and others identify with algorithms.

Creating a Better Account of the World

Calling out “the god’s eye trick” as subjective is not enough, Stuart tells us. Responding to the postmodern critiques of science which were prevalent in the 1980s, Harding and Haraway argue very strongly against just deconstructing science as merely subjective. Instead, they argue that to critique totalization and to counter the god’s eye trick, objectivity needs to be reclaimed and blended with subjectivity: “Feminists have to insist on a better account of the world; it is not enough to show radical historical contingency and modes of construction for everything” (Haraway 1988: 579). Rather than simply pitting critique against the construction of “objective” knowledge, Haraway argues that “feminists have stakes in a successor science project that offers a more adequate, richer, better account of a world, in order to live in it well.” This idea of a successor science focuses on the positionality of knowledge, the positionality of claims that people make, and the relation they have to socio-technical infrastructures of knowledge production. For designers, that involves being reflexive about the standpoints of the systems that technology enables.

That’s why standpoint epistemology is an important part of design that critiques and creates, Stuart tells us. Standpoint epistemology takes into account “the ways in which specific social and material conditions provide partial perspectives, from which certain claims to knowledge can and cannot be made.” At this point, Stuart shares a series of scholars working in standpoint epistemology whose work has quietly influenced his own design activities:

- Scripts (Madeleine Akrich)

- Situated action (Suchman)

- Technophenomenology (Feenberg)

- Telepistemology (Goldberg)

- Critical technical practice (Agre)

- Agential realism (Barad)

Examples of Successor Systems

Stuart starts by talking about Turkopticon by Lilly Irani and Six Silberman, which is designed to critique the inequalities built into Amazon’s Mechanical Turk system. Mechanical Turk includes a massive surveillance system that allows employers to track the behaviour of workers, helping them to be better and more efficient employers. In contrast, workers have no ability to rate their employers in return. Workers also have no way to talk to each other; they are separated by virtual walls. Mechanical Turk doesn’t offer people a space to articulate and understand what it means to be a worker in this space. The creators of Turkopticon built a browser script that lets workers rate employers, adding new knowledge to the Turk system based on the priorities of workers rather than employers or Amazon. Users of Turkopticon get to see how other Turkers have rated an employer before they accept a job. At HCOMP last week, Kristy Milland, community manager of TurkerNation.com, noted that employers are now monitoring their Turkopticon ratings and changing their behaviour in response. In the case of Turkopticon, the successor system is critiquing Mechanical Turk and generating new subjective knowledge that comes from the perspective of workers.
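To make the mechanism concrete, here is a minimal Python sketch of the kind of worker-side knowledge described above: workers record ratings of employers, and anyone can look up a summary before accepting a job. This is not Turkopticon’s actual code. The class name `EmployerReviews`, the employer ids, and the 1-to-5 scale are all hypothetical choices made for illustration.

```python
from collections import defaultdict
from statistics import mean


class EmployerReviews:
    """Hypothetical worker-maintained store of employer ratings."""

    def __init__(self):
        # Maps an employer id to the list of scores workers have given it.
        self._ratings = defaultdict(list)

    def rate(self, employer_id, score):
        """Record one worker's rating (assumed scale: 1 = worst, 5 = best)."""
        if not 1 <= score <= 5:
            raise ValueError("score must be between 1 and 5")
        self._ratings[employer_id].append(score)

    def summary(self, employer_id):
        """Return the average and count of ratings, or None if unrated."""
        scores = self._ratings.get(employer_id)
        if not scores:
            return None
        return {"average": mean(scores), "count": len(scores)}


# A worker checks what others have said before accepting a job.
reviews = EmployerReviews()
reviews.rate("employer-a", 5)
reviews.rate("employer-a", 3)
print(reviews.summary("employer-a"))
print(reviews.summary("employer-b"))
```

The point of the sketch is the direction of the data: ratings flow from workers to other workers, rather than from employers to the platform.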

Recently, Lilly and Six have written about the experience of people starting to rely on their system, which was initially designed just to critique Mechanical Turk.

Next, Stuart tells us about Hollaback! by Jill Dimond, where the system critiqued is the “systematic, institutional ignorance of street harassment across societies.” The goal of Hollaback is to help people reclaim the narrative, raising consciousness and supporting organizing. In the system, people submit stories about harassment, which are mapped. Unlike crime maps, Hollaback doesn’t set out to warn people about certain neighborhoods. Instead, it’s designed to support the realization that this is a society-wide issue. There are immense stakes in who gets to decide how to represent street harassment. The goal is not to fix a long-term social problem once and for all with technology, but instead to use technology and crowdsourced systems to articulate critique, giving people space to produce knowledge about the problem and organize around it.

Stuart tells us that Hollaback and Turkopticon share the following commonalities:

- an existing, often dominant system of knowledge production, not necessarily software-based

- standpoints or kinds of accounts that this existing system does not sufficiently incorporate

- a project to build better accounts of the world

(read more about Hollaback in my AcaWiki entry on Jill’s Hollaback! research)

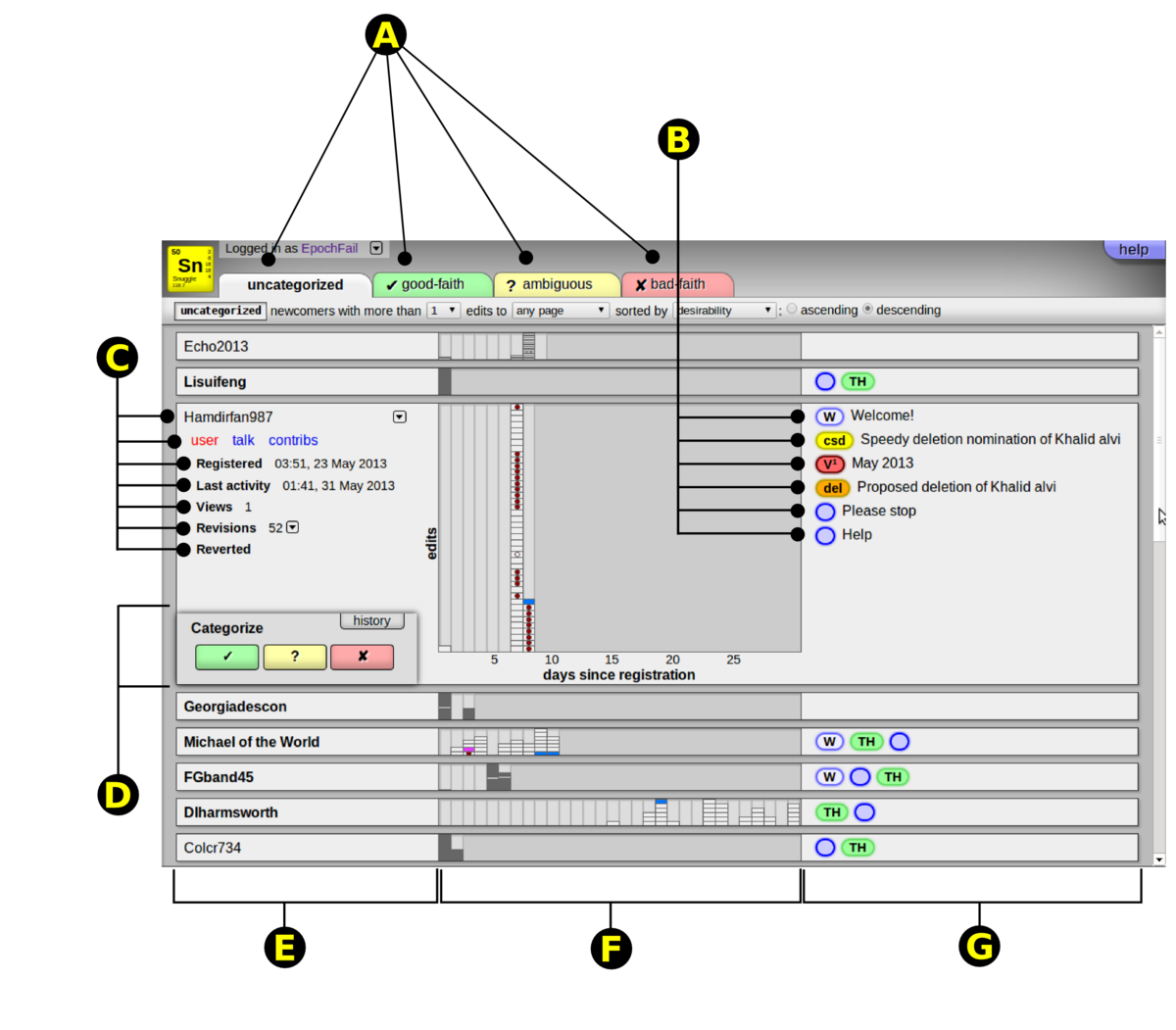

“Snuggle UI” by EpochFail – Own work. Licensed under CC BY-SA 3.0 via Wikimedia Commons.

Stuart next tells us about the Snuggle system. When Stuart and Aaron Halfaker looked at Wikipedia, they observed a situation where Wikipedians treated newcomers as potential vandals who were trying to reduce the quality of Wikipedia. This attitude sometimes gets built into Wikipedia’s technologies. Huggle is one example: an automated tool for finding bad editors on Wikipedia, removing damaging edits, and deterring people from making bad edits. In the Huggle system, users see a side-by-side view of what an edit changed. If you click a button, the edit is removed from Wikipedia and the user who made it is sent a nasty message. If they receive enough nasty messages, then their account will be blocked. It’s a policing model of supporting Wikipedia, keeping the encyclopedia safe from people who want to cause damage to it. While there is a clear need to keep Wikipedia safe from vandalism, these nasty messages also tend to turn newcomers away.

Stuart and Aaron took on the motto “Snuggle, don’t Huggle.” Their system used an interface similar to Huggle’s to offer more supportive feedback to newcomers. Stuart tells us about the design changes they made between Huggle and Snuggle. Firstly, when users start using Snuggle, they see edits made by other people in the tool, including new users, as part of a networked public where you can see other people’s supportive actions, rather than working as a lone defender keeping the vandals at bay. Secondly, editors are reviewed not edit by edit, but across their edits as a whole. Thirdly, when editors review a user, they are able to indicate levels of ambiguity, opening spaces for people to contest and debate how an individual is rated and what the appropriate action is (read the Snuggle paper here – PDF).
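As a rough illustration of the second and third design changes, here is a small Python sketch of reviewing a newcomer as a whole rather than edit by edit, with room for reviewers to disagree. It is not taken from Snuggle’s code. The class `NewcomerReview`, the usernames, and the three category labels are assumptions made for this example.

```python
from collections import Counter
from dataclasses import dataclass, field

# Assumed labels; "ambiguous" lets a reviewer decline to pass a firm judgment.
CATEGORIES = ("good-faith", "ambiguous", "bad-faith")


@dataclass
class NewcomerReview:
    """Hypothetical record of how reviewers see one newcomer overall."""

    username: str
    edits: list = field(default_factory=list)        # the whole edit history
    assessments: list = field(default_factory=list)  # (reviewer, category)

    def assess(self, reviewer, category):
        """Record a reviewer's judgment of the editor, not of a single edit."""
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        self.assessments.append((reviewer, category))

    def tally(self):
        """Count how many reviewers chose each category."""
        return Counter(category for _, category in self.assessments)

    def is_contested(self):
        """True when reviewers disagree, signalling a case worth discussing."""
        return len(self.tally()) > 1


newcomer = NewcomerReview(
    "NewEditor42", edits=["fixed typo", "added unsourced claim"]
)
newcomer.assess("mentor_a", "good-faith")
newcomer.assess("mentor_b", "ambiguous")
print(newcomer.tally())
print(newcomer.is_contested())
```

Keeping every reviewer’s assessment visible, instead of collapsing them into a single verdict, is what leaves space for the contestation and debate described above.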

What Do Successor Systems Achieve?

How do you know when a successor system is successful? Snuggle didn’t solve all of Wikipedia’s problems forever. However, the Snuggle system *did* make visible the assumptions built into Wikipedia’s systems, showing that a newcomer could be someone to be taught rather than a vandal to be fought. Secondly, the perspective of all newcomers as suspicious became just one perspective among several, rather than the natural, default choice (Perry Hewitt has recently blogged about the need for awareness of default choices). Thirdly, a reflexive public assembles in the production of more situated knowledges: Turkers met each other, people experiencing street harassment discovered they weren’t alone, and Wikipedians discussed and debated the meaning of mentorship and support on Wikipedia.

Why Build Successor Systems?

I asked Stuart: why build a successor system rather than just write a paper that identifies problems? At first, Halfaker and Geiger thought that they could hand the Wikipedia community a PDF and say “here are all your problems.” They thought that people would go out and make changes based on the findings in their research. But people had been talking for years about creating an alternative to Huggle, and it hadn’t happened. So they created Snuggle to bring people together to work on the issue.

Doing Data Science & Technology Design for Change Outside Powerful Corporations

Stuart and Aaron’s idea of successor systems provides language to explain the work I’ve been doing for several years now to design technologies that highlight social problems and then structure cooperative responses to those problems. Jill Dimond’s work on Hollaback!, Lilly Irani and Six Silberman’s work on Turkopticon, and Stuart and Aaron’s work on Snuggle all show ways that we can use code to critique and restructure the forces that shape our lives, even if we don’t hold the keys to Facebook, Google, Microsoft, or Twitter’s core technologies. I am deeply grateful to them for their effort to describe this approach and carry it out themselves so well.

Update Dec 12: this post had incorrectly attributed a quote to a Mechanical Turk administrator, when the quote was in fact from the community manager of TurkerNation.com. This has been corrected.