This is a liveblog of the town hall discussion “CSCW Research Ethics Town Hall: Working Towards Community Norms” at CSCW 2017.

Panelists from the SIGCHI Ethics Committee

- Amy Bruckman, Georgia Institute of Technology

- Barry Brown, Stockholm University

- Casey Fiesler, CU Boulder

- Jeff Hancock, Stanford

- Cosmin Munteanu, University of Toronto

- Bob Kraut, CMU

The SIGCHI Ethics Committee is charged with facilitating community conversations that help social norms emerge from the community. This should help refine ACM’s policies and procedures. The Committee does not make decisions about what is ethical. Bruckman also sits on ACM COPE, which works on ACM’s Code of Conduct (which was written before the Web existed).

Bruckman and Fiesler start the town hall off by reflecting on how traditional human subjects rules were written for medical research. These rules are often a poor fit for social science, internet environments, methods that involve stakeholders as full partners, and interventions in public places.

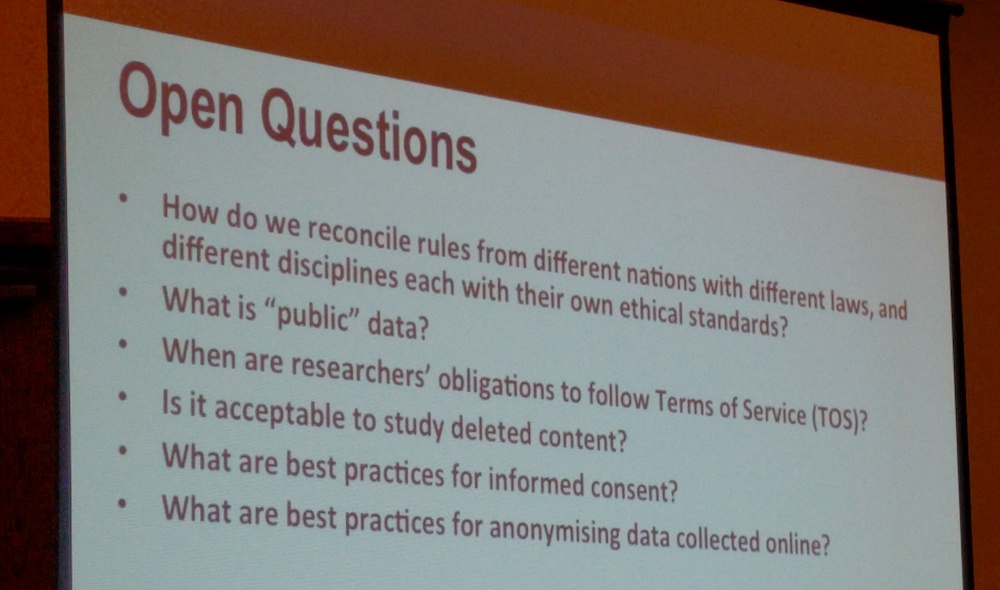

Common open questions in research ethics across computer science communities include how we develop ethical standards that work cross-nationally and cross-disciplinarily. Serious issues involve data privacy and legality with respect to “public” data and platform terms of service, and how to secure truly informed consent. The following are the questions and comments discussed during the town hall.

How do we protect research subjects, especially those from marginalized groups, who can be discovered through reverse lookup on social media platforms?

- Bruckman brings up the case of Bob Kraut’s study that quoted directly from breast cancer forum posts: anyone could join the forum and find the personal contact information of subjects.

- Kraut notes that research ethics in medical studies talk about protecting reasonable expectations of privacy but not unreasonable expectations of privacy, and these are often baked into warnings on such forums. He does attempt to massage the quoted language somewhat so the posts are not very easy to find, but there are limits to that.

- This brings up ethical questions about the research and the authenticity of the data. Bruckman tells a story about being Sherry Turkle’s research assistant on Life on the Screen, in which she changed the medical condition a child was suffering from. That changed the severity of his condition and perhaps others’ interpretation of his behavior, but it helped protect his privacy. There is a trade-off and it needs to be considered.

- A key question is whether doing the research elevates the publicity of research subjects and puts them in harm’s way when that wouldn’t otherwise have occurred, even in public spaces.

- Thinking to the future: we also can’t anticipate all the new ways research advances will allow anyone to be identified based on what scant trace data is revealed.

- From the disciplinary perspective of media studies, there is a tradition of assuming that any data you can get that was published (microfiche, etc.) is fair game for research and carries no reasonable risk. But this may no longer be true: does public still mean public?

What is our responsibility to identify reviewers who represent the marginalized communities a paper studies? How essentializing or insulting is that to the marginalized population?

- Bruckman notes that postmodern ethnography (Rosaldo, Culture and Truth) argues that we are ethically required to report back to our populations and let them respond to our findings.

- An audience member mentions a study of ageism among bloggers in which the researchers made sure to return to the research subjects, ask whether they wanted to change any of the quotes about them, and be clear about what the implications would be for them and for the research.

- Hancock brings up the case of CSCW paper reviews. He wants to see more diverse review panels (and implicitly a more diverse community of scholars) to help shepherd papers that look at particular populations.

- What do you do if people want to change the representation in the research?

- Kraut expands on Hancock’s note: too often the feedback comes post hoc at the reviewing stage, when it should also be part of the research project design stage. This isn’t just about ethical research; it also stops good research at the review stage because a reviewer is sensitive to a particular issue that has already passed ethical review earlier.

- Bruckman responds to Kraut by noting that Institutional Review Boards (IRBs) are often asleep at the wheel. How do we deal with research that gets through IRB while the true ethical quality of its design is still murky?

- Hancock says that we have a responsibility to review the IRB.

- Munteanu puts this in the perspective of IRBs and research ethics around the world, where we as researchers sometimes have a choice of how strict an ethical policy we want to follow: European privacy laws, Canada’s detailed research ethics, or the U.S.’s looser strictures?

How do we handle visual data about research subjects, especially marginalized groups? Sometimes we can synthesize approximations of that visual data to show what we mean without subjecting them to harmful publicity.

IRB research ethics is all about protecting the people who are studied. Journalistic ethics differ by protecting the people’s right to know. Corporate ethics include an obligation to stakeholders: if you do corporate research as an employee or contractor, you are responsible to your employer. This complicates the research ethics question in the space of CSCW, and we need to be aware that IRB is only one frame.

- Should we talk about both positive and negative research ethics? The negative ethics required by IRB upfront to handle harms are different from the positive ethics of doing something that helps people. In public health research, there is often a need to approve studies quickly to help as many people as possible. But studies that include methodologies like ethnography of stories of struggle can be hard to get through an IRB because the focus is on protecting subjects rather than on the positive outcomes of the work.

- Brown notes that we can have excellence in ethics that are divorced from specific regulations.

- Munteanu notes that Canadian research ethics put duty to science as the top responsibility with a respect for subjects (not a do no harm principle).

Blogs written by parents of autistic children are an example of a sensitive dataset where clustering quotes creates serious ramifications for the population, because it falls under medical definitions of autism that can change the medical treatment these people receive.

- Hancock cites Solon Barocas: we can computationally infer things about a population that they did not give consent to collect information about.

What do we do about the misrepresentations of our work in the public?

- Are there examples of CSCW proceedings articles that have been misrepresented?

- See John Vines’s study on the misrepresentation of HCI papers in the media.

- One study author included in John Vines’s piece talks about his project, which used a camera inside the trash bin to study what people threw away. Both liberal and conservative press framed the research as solutionist surveillance to solve bad garbage behaviors, which was not the point of the paper. He says the problem is that you really can’t control the public.

What should we do when a paper involving deception is sent to the reviewers?

- Peter Krafft mentions his research using bots as virtual confederates in online field research, in which they pose as humans. This may be deceptive (against the ACM Code of Conduct), but it is also an important emerging area in CSCW.

- Different communities have different ethics around deception. The Communication discipline widely uses deception but also has clear processes for how to handle it, especially for post-study debriefs. There are real research cases that require deception and we need to think carefully about that.

- Hancock notes that IRBs do have a lot of experience in deception research.

- Of course, industry cannot rely on IRB for addressing deception practices.

- Another audience member notes that debriefing in online communities is really hard. We need best practices for how to do post-deception debriefs well in these cases.

- Munteanu says that in Canadian research ethics, deception and research value can be balanced against harm or respect for subjects.

Some of the papers submitted to CSCW have never undergone ethical review: what should the program committee do about these papers?

- In a similar case, a cross-institutional research study had received positive IRB reviews from several institutions. Do we feel that we have the authority as a program committee to overrule institutions’ ethical reviews if we disagree that approval should have been given?

- Cliff Lampe worries that we might be performing a kind of cultural hegemony if we as a community start overruling other communities and their ethical review boards.

- Example of a SIGCOMM paper about Friendster: the program committee chose to append a prominent box above the title of the paper that said they had ethical concerns about the paper, even though they published it. This was a kind of Scarlet Letter approach.

- Ideally, these things should happen in public rather than in private program committee rooms.

- A dangerous line of thinking is that the “harm has already been done” by the research, so what additional harm do we do by publishing?

- Another consideration for researchers doing online research is that we are crossing many localities when we do our work. We don’t know how to think about this. Do we have an absolute standard as a community? Which locales should take precedence? Should it be the strictest ones?

- Munteanu provides the example of a research study in which he was grilled by an expert ethics panel for hours to get to a multi-page decision. If he had received the Scarlet Letter on that paper it would suggest that the program committee’s opinion was better than the panel of ethics experts, which doesn’t seem right.

Why can’t we make room for ethical consideration sections within papers or otherwise publish the outcomes of ethical reviews?

- Fiesler argues that we should make room for them and that they should be part of the methods section, but we need to work through the paper-length logistics that suggest we can’t.

- Mako Hill notes that when he submits papers to general science venues, they do require a section on ethics. He doesn’t see why we in CSCW can’t do this as well. We can start in places that don’t have strict page limits, first encouraging people and in some cases requiring them.

- Kraut discusses the analogous issue of replicability of research. He does a lot of work with Facebook data that other people can’t get. Replicability should be part of the ethical considerations, and some scientific agencies require data to be released so that science can benefit more generally. This presents a conundrum.

- Bruckman extends this with a question: What is the cost of platforms controlling what is said about them?

- Should funding bodies make that requirement rather than publication venues and research communities? In one audience member’s experience, the answer is ‘no’; he argues that Facebook should bear the cost with respect to the value to science. Descriptive statistics can sometimes enable people to create synthetic datasets. There is more nuance to this question of public data.

- Hancock replies that public and private data really exist on a spectrum. For instance, archaeologists only allow trained experts to handle artifacts. We could come up with processes that allow for limited publicity to handle some of these issues.

What about ethical review of software creation that is an intervention, not just research? What should ACM’s Code of Conduct say about the ethics of software development?

- Bruckman notes that it was in the old Code of Conduct and it is improved in the new version. This is the time to send feedback on this year’s revision to ACM COPE: http://ethics.acm.org/code-of-ethics/code-2018/.

- The new code will be finished in the next fourteen months and there will be lots of opportunities for public input. Researchers are only a part of the Code. And IEEE has similar reviews ongoing.