Social media influences our daily lives, but we have little influence on how social media platforms work. We’re tired of algorithms that don’t really understand what we want to see. We’re concerned about how content on these platforms is being moderated. And we’re frustrated with our lack of control over these communities. If you could change how social media works, what would you want to see?

Gobo is our playground for answering this question. Gobo is an experimental tool that gives you control and transparency over what you see on your social media feeds. It lets you set your own “rules”, so you can decide how your social media feeds work. We’re excited to share how Gobo has evolved over the past year, and how that evolution supports a larger vision for the future of social media.

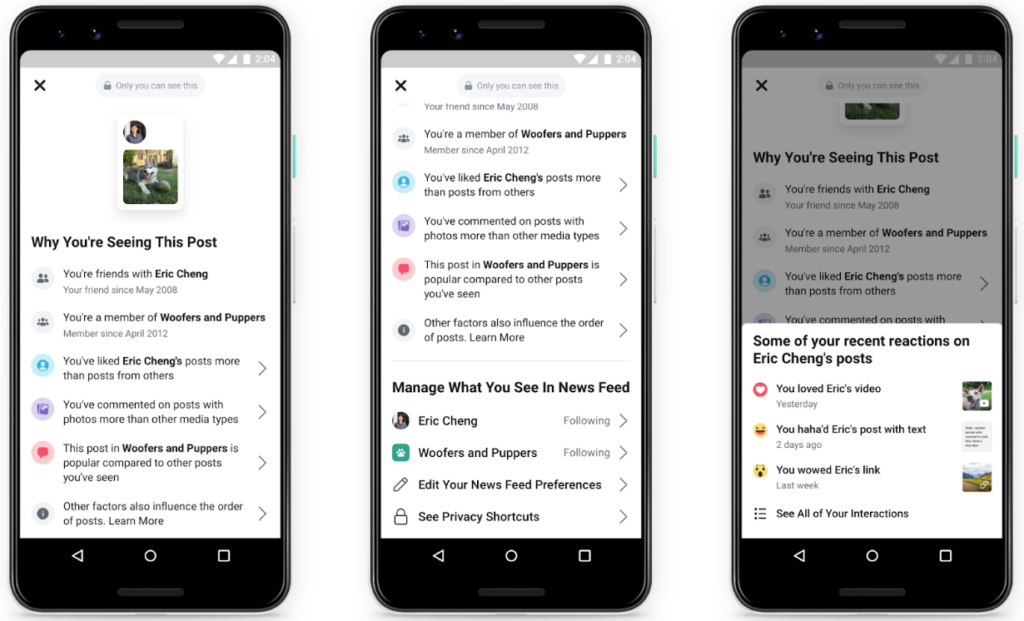

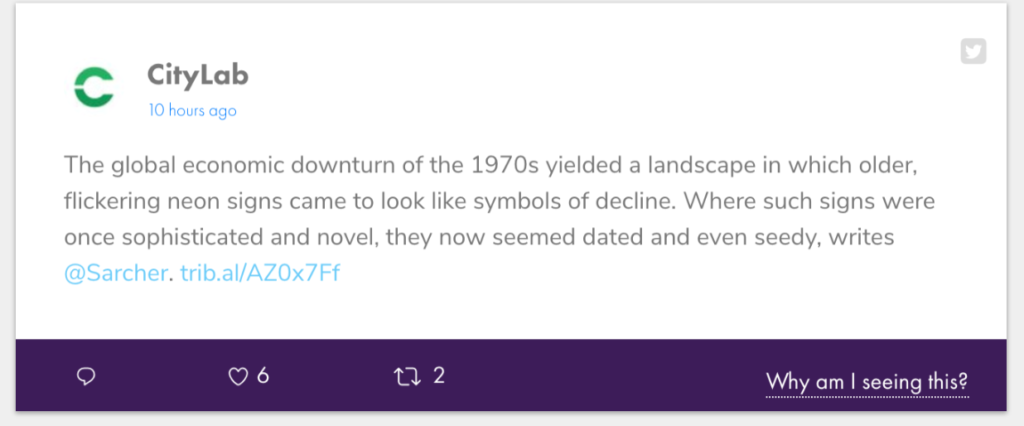

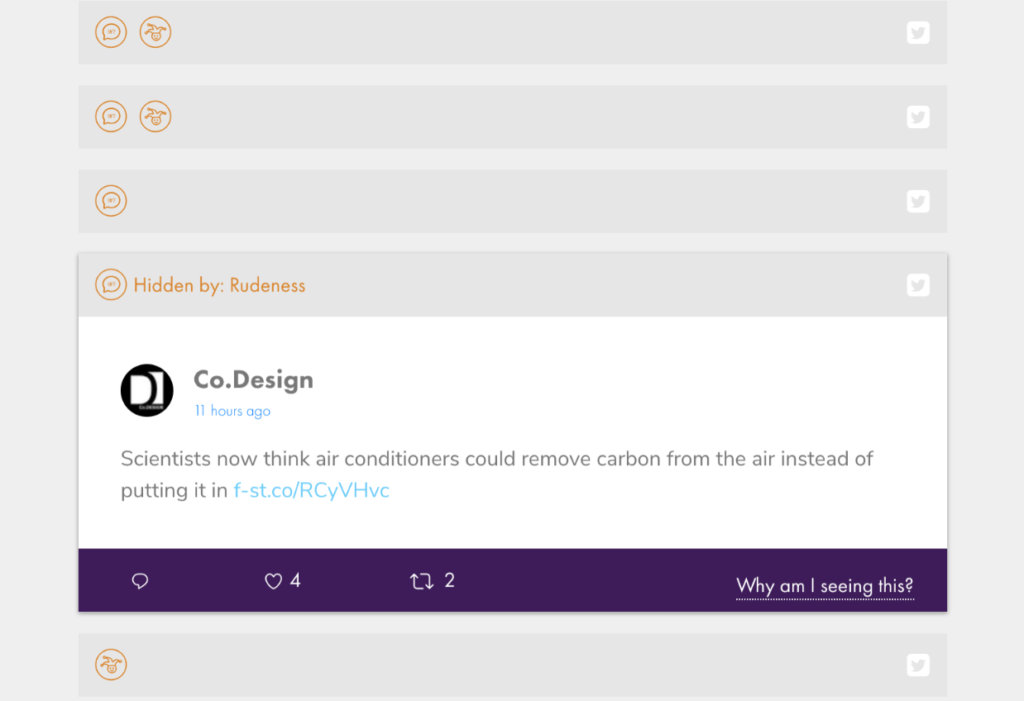

On March 31, 2019, Facebook announced a new feature called “Why am I seeing this post?” The feature is described on their Newsroom blog as a way “to help you better understand and more easily control what you see from friends, Pages and Groups in your News Feed.” It mimics a core feature called “Why am I seeing this?” that we introduced when we launched Gobo in 2017. While it’s unclear whether Gobo inspired this change, explaining why a post appears in your feed has been a critical part of our mission for transparency on social media.

But increased transparency is just the start. While we have the right to know why we’re seeing content in our feeds, don’t we also have the right to control those feeds and ensure social media isn’t limiting our picture of the world?

In the aftermath of Cambridge Analytica and the 2016 US elections, we’re seeing major platforms like Facebook starting to address collective concerns over how social media platforms can be used to amplify, influence, and manipulate. We’re also seeing efforts to hold these platforms more accountable and to make these platforms less polarized. There’s a lot to figure out, but we believe the conversation needs to move beyond rethinking these platforms. We need to start rethinking how social media works.

Envisioning an Alternative Future for Social Media

We started Gobo in 2017 as a technology-to-think-with. We value the importance of criticism, and at the same time, we want to transform this criticism into a working tool that can demonstrate the future of social media that we want to see. For the past year, we’ve been rethinking some of our goals, implementing new features, and creating a new visual identity.

Ethan Zuckerman, director of the Center for Civic Media, articulates some of our current visions for social media with four P’s: personal control (allowing you to decide how your feeds work), plural in purpose (having a diversity of platforms for different uses), public in spirit (fostering dialogue between different groups), and participatory in governance (letting the community that uses a platform determine its guidelines). With Gobo, we’re arguing for social media that prioritizes personal control and is plural in purpose.

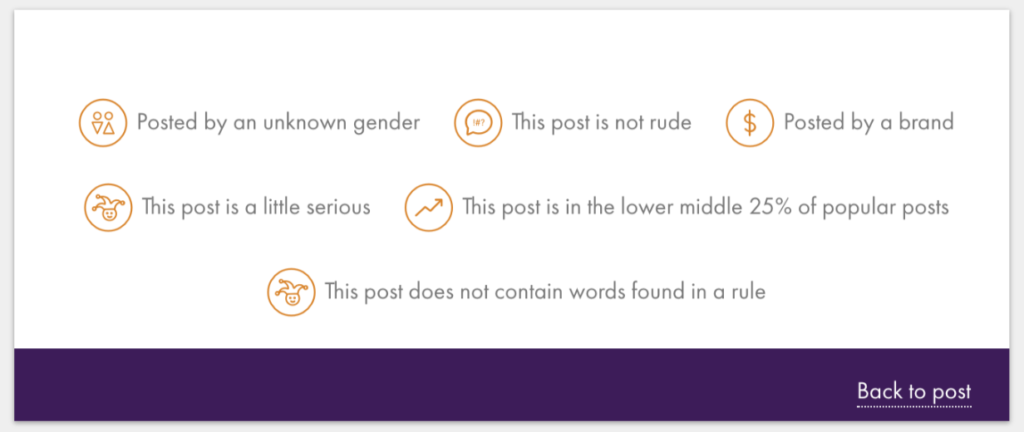

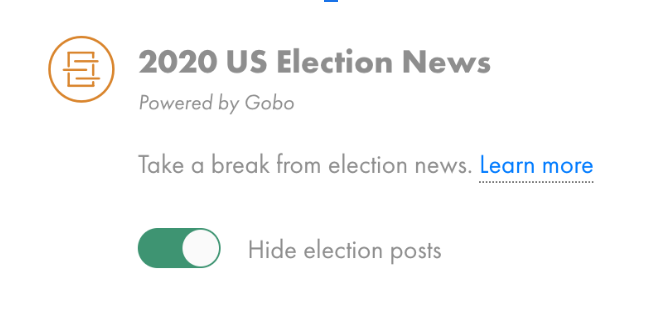

Towards Personal Control

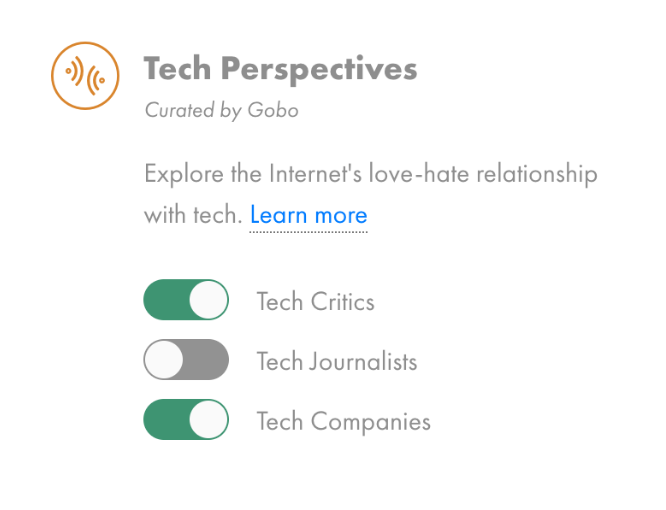

Gobo connects to your social media accounts and lets you decide which posts show up and which are hidden. Instead of letting a large company’s unknown algorithms construct how you see the world online, Gobo allows you to control your feeds with a set of “rules.” We initially launched Gobo with six rules: “Politics,” “Seriousness,” “Rudeness,” “Gender,” “Brands,” and “Obscurity.” Since then, we’ve added two more: “2020 US Election News” and “Tech Perspectives.” “2020 US Election News” hides posts based on keywords, in this case the names of candidates running in 2020. We built this rule on the idea that sometimes we need a break from certain topics. News cycles can be overwhelming, and viral trends can be downright annoying. We’re working on rolling out a customizable version of this rule soon, so you can create a more specific rule that works for you.
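To make the idea of a keyword rule concrete, here is a minimal sketch of how one could work. It is an illustration under our own assumptions, not Gobo’s actual code: the `Post` class, the `make_keyword_rule` helper, and the keyword list are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Post:
    # Hypothetical, simplified post; a real post carries far more metadata.
    author: str
    text: str


def make_keyword_rule(keywords):
    """Return a function that reports whether a post mentions any keyword."""
    # Word boundaries stop a short keyword from matching inside a longer
    # word, and the match ignores case.
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(k) for k in keywords) + r")\b",
        re.IGNORECASE,
    )

    def should_hide(post):
        return pattern.search(post.text) is not None

    return should_hide


# Placeholder keywords, not the list Gobo uses.
hide_election = make_keyword_rule(["Candidate A", "Candidate B"])

posts = [
    Post("news_desk", "Candidate A unveils a new policy plan"),
    Post("friend", "Look at this sourdough I baked"),
]
visible = [p for p in posts if not hide_election(p)]
```

A customizable version of such a rule would mostly amount to letting you supply the keyword list yourself.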

We also added “Tech Perspectives,” which brings in posts written by people with different perspectives on the tech industry. Just as political news has its biases, technology news has its own set of biases based on people’s roles within the industry. With this rule, you can explore perspectives from critics, journalists, and the companies themselves.

You might have noticed that we also reframed the language: we went from “filters” to “rules.” This shift happened because we wanted to emphasize not only having control over removing content from your feed but also having control over adding content (and down the line, flagging certain kinds of content). We think “rules” is a helpful term for encompassing all of the different types of control we could have on social media. These rules are software plug-ins for our open-source platform, and anyone can write one.

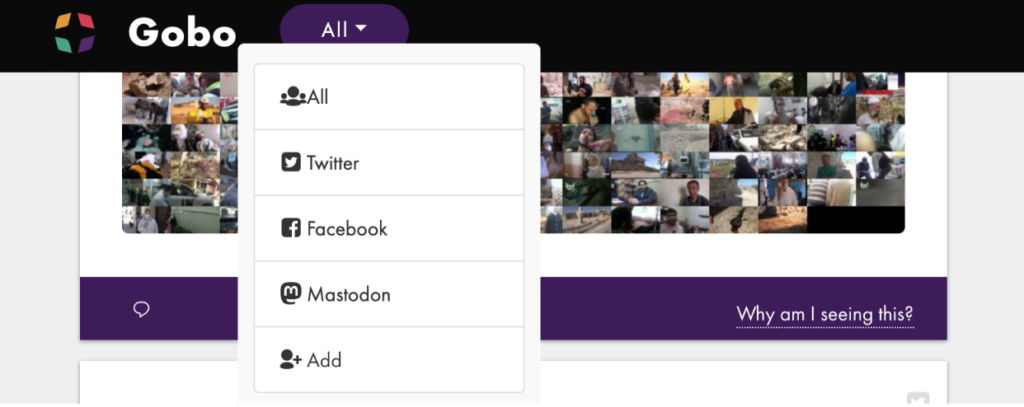

Towards Plurality

If the biggest platforms only get bigger, they continue to gain disproportionate power over what we see on our feeds and how we can interact with them. Instead, we believe that many smaller social media platforms should exist to serve different purposes. With Gobo, we want to demonstrate a future where you can easily navigate between multiple networks. That’s why we’ve made Gobo compatible with three platforms: Twitter, Facebook, and most recently, Mastodon. Now, you can view content from all of these platforms in a single feed, or view content from each platform separately.
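As a rough sketch of what a single feed across platforms involves, the example below merges per-platform post lists and sorts them by time. Everything here is an assumption made for illustration (the `Post` fields, the `combined_feed` function, and the sample data); it does not describe how Gobo itself stores or orders posts.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Post:
    # Hypothetical common shape that each platform's posts are mapped into.
    platform: str
    author: str
    text: str
    posted_at: datetime


def combined_feed(posts_by_platform, only=None):
    """Merge per-platform post lists into one feed, newest first.

    Pass `only` to view a single platform on its own.
    """
    merged = [
        post
        for platform, posts in posts_by_platform.items()
        if only is None or platform == only
        for post in posts
    ]
    return sorted(merged, key=lambda p: p.posted_at, reverse=True)


# Made-up sample data.
posts_by_platform = {
    "twitter": [Post("twitter", "a", "Morning thread", datetime(2019, 11, 1, 9, 0))],
    "mastodon": [Post("mastodon", "b", "Fediverse hello", datetime(2019, 11, 1, 10, 30))],
    "facebook": [Post("facebook", "c", "Page update", datetime(2019, 11, 1, 8, 15))],
}

everything = combined_feed(posts_by_platform)
mastodon_only = combined_feed(posts_by_platform, only="mastodon")
```

The key design choice in a sketch like this is normalizing every platform’s posts into one shared shape first, so that rules and sorting never need to know where a post came from.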

However, the process for integrating multiple platforms is anything but straightforward when each one has its own API restrictions. Open-source platforms like Mastodon are highly compatible with Gobo, while platforms like Facebook heavily guard their data. On Gobo, we can only access public page content from your Facebook feed, which means we can’t access any content posted by your Facebook friends. Even getting this extremely limited API access had us jumping through multiple hoops. With other platforms like Instagram and LinkedIn, we’re facing similar API restrictions. With these barriers to access, it’s not only a matter of whether we want to have greater control over our social media feeds but whether we have that right in the first place. We will continue working to bring additional platforms into Gobo, but we are realizing that this will be a policy battle as well as a technical effort, as many platforms are designed to prevent you from using a tool like Gobo.

While user privacy is a very real concern, the restricted access to these platforms ultimately makes it more difficult to study them and hold them accountable. It also allows them to further consolidate power, as our data becomes a commodity that only big advertising firms can access. With Gobo, we’re hoping to demonstrate the potential for interoperability between social media platforms. Ultimately, we want this to allow for more highly customizable tools that we can use to reclaim agency over our social media.

Towards Algorithmic Literacy

During our redesign of Gobo’s interface, we especially wanted to focus on increasing transparency as a way to talk about how our algorithms work and where they come from. One of our major updates is showing you exactly where posts are getting hidden based on the rules you set. Whereas before we removed hidden posts from your feed entirely, we now collapse them in place. Not only does this more clearly visualize how your feed is being impacted by the rules’ algorithms, it also extends a stronger invitation to explore why certain posts were hidden.
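The difference between removing and collapsing a post can be sketched in a few lines. This is a hypothetical illustration rather than Gobo’s implementation: `FeedItem`, `apply_rules`, and the toy predicate standing in for a “Brands” rule are our own assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class FeedItem:
    text: str
    collapsed: bool = False
    # Names of the rules that matched, so the interface can explain why.
    hidden_by: list = field(default_factory=list)


def apply_rules(texts, rules):
    """Collapse matching posts instead of dropping them.

    `rules` maps a rule name to a predicate that takes the post text.
    """
    feed = []
    for text in texts:
        matched = [name for name, matches in rules.items() if matches(text)]
        feed.append(FeedItem(text, collapsed=bool(matched), hidden_by=matched))
    return feed


# Toy predicate standing in for a real rule.
rules = {"Brands": lambda text: "sale" in text.lower()}
texts = ["Huge SALE this weekend only", "Photos from the hike"]

feed = apply_rules(texts, rules)
```

Because every post stays in the list, the feed keeps its original length and order, and each collapsed item carries the names of the rules responsible for hiding it.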

We’re continuing to experiment with ways that Gobo can serve as a tool for promoting algorithmic literacy. In the spring of 2018, we created an exhibit for the Tech Museum (with support from Mozilla) that lets people play with creating their own feed and comparing it to a standard one. Now, with our friends at Berkman Klein, we’re brainstorming a physical game based on Gobo that can engage youth in discussions and reflections about how algorithms influence what we see.

We’re building Gobo as both a tool for managing your social media clutter and a prompt for sparking conversations on alternative futures for social media. Try it out at gobo.social, and let us know what you think at gobo@media.mit.edu!