Joy Buolamwini, a doctoral student at the Center for Civic Media at the MIT Media Lab, and Deborah Raji, an undergraduate at the University of Toronto, released an important piece of research on January 25, an analysis of commercial facial analysis tools that indicated that most of these tools perform better on white male faces than they do on black female faces. Specifically, Buolamwini and Raji tested to see whether machine learning models could identify faces as male or female from a test set of images.

The research, which built on an earlier paper by Buolamwini and Dr. Timnit Gebru, won the best student paper award at the AAAI/ACM conference on Artificial Intelligence, Ethics and Society. It’s also led to some pushback from Amazon, whose Rekognition gender classification feature was one of those Buolamwini and Raji evaluated. Dr. Matt Wood, the general manager of artificial intelligence at Amazon Web Services, offered several objections to the MIT research, declaring “this research paper and article are misleading and draw false conclusions.”

Wood’s objections include the concern that Buolamwini and Raji, and those who write about their work, are not drawing a distinction between facial analysis (detecting attributes or components of a face) and facial recognition (identifying a face as belonging to a set of known faces). Additionally, he points out that Amazon has recently changed its machine learning models and that the researchers were testing an earlier tool. In Amazon’s own internal results, Wood explains, the system found no differences in its capabilities of identifying gender accurately between different ethnic groups.

Buolamwini has responded to Wood’s critique on her own blog, and I’m not going to get into the technical detail she offers—I strongly recommend reading both his and her posts. But as Buolamwini’s doctoral supervisor and as a supporter of her work, I do feel compelled to step in and talk about the strange situations that arise when there’s a research dispute between a student researcher and one of America’s largest and wealthiest tech companies.

Wood is correct that the paper evaluates an earlier version of Amazon’s machine learning model—Buolamwini and Raji conducted the tests in August 2018, and Amazon updated the model in November 2018. Anyone who’s ever encountered academic publishing knows that it takes a while to get a paper published, and the researchers already plan to test Amazon’s new model. But Buolamwini points out that the Amazon model she tested was marketed to customers, including to law enforcement officials—if the model has systemic biases, it’s very much a matter of public interest even if the new model is an improvement.

Wood may well be right that the new machine learning model performs well on the tests his team has run on it. That’s a challenge with testing machine learning—models can perform very differently on different data sets, especially on data sets they’ve seen in their training corpus. It’s absolutely possible that Amazon’s model could perform well on their internal tests and poorly on Buolamwini and Raji’s tests without any malpractice on either side. Buolamwini has been advocating for facial recognition systems to test against a common standard developed by NIST, the National Institute for Standards and Technology. Amazon has not been willing to make a version of their system available for NIST testing, while several other large corporations have, citing the size of their database and training sets.

What concerns me more is Wood’s challenge that facial recognition and facial analysis shouldn’t be conflated. He’s right, of course; the tests for those two tasks are quite different. But Buolamwini and Raji don’t conflate the two tests. Instead, they suggest that poor performance on facial analysis may suggest deficiencies in training and testing sets. Buolamwini’s research has shown that several machine learning models underperform on the task of correctly identifying the gender of black women, likely because the training or testing data included many more white male faces than dark female faces. This test of gender identification doesn’t guarantee that a model that fails to correctly classify darker female faces will fail to identify them in a facial recognition test. But it does raise concerns that the training and testing data could have systemic biases and that the providers of these models should carefully consider whether they’re training their systems on the full spectrum of diverse human faces.

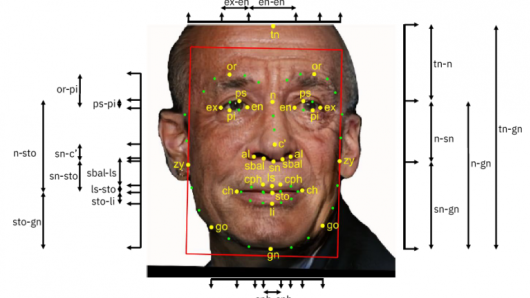

Buolamwini’s research is uncomfortable for the companies she’s identified as having imperfect results. It’s gratifying that IBM and Microsoft, both identified by Buolamwini in previous research as showing bias in their models, have responded by learning from her critiques, improving their models and working to solve the larger problem of algorithmic bias. IBM has released a dataset of one million faces with detailed face annotation to help the research community better understand fairness in facial analysis technology; Microsoft has called for government regulation in the space over concerns of bias and warned its investors that biased algorithms could harm the company’s reputation. It’s deeply disappointing that Amazon’s response would be to dismiss this research as misleading and suggest that the authors are drawing false conclusions.

In response to criticisms made through peer-reviewed research, IBM and Microsoft have considered limitations in their software, made substantial improvements, and engaged more broadly in debate about the ethics of artificial intelligence. One of the goals of scholarly research is to fuel healthy debate that can inform the public. Amazon’s responses, on the other hand, are defensive, impossible to verify, and show little reflection on the underlying issues the field of AI faces as a whole.

I’ve had the pleasure of working with Joy Buolamwini for the past four years as she’s become an expert in computer vision technologies and worked to understand the biases that can emerge in these systems. Buolamwimi’s aims are not to embarrass any particular company, but to get the technology community, as a whole, to acknowledge that AI systems are extremely likely to replicate existing societal biases into digital systems unless we carefully, consciously, and explicitly address these biases. Her work on the Safe Face Pledge, which urges companies take steps to mitigate the possibility that facial identification and recognition work can cause serious harm, is an important step towards helping companies recognize the potential pitfalls in this sector and act to address them. It’s deeply disappointing that Amazon would respond to these efforts by dismissing this research instead of engaging with it, learning from it, and working to improve their policies.

Image: An annotated image from IBM’s Diversity in Faces dataset for facial recognition systems. (IBM)