I recently gave a short talk at a Data Science event put on by Deloitte here in Boston. Here’s a short write up of my talk.

Data science and big data driven decisions are already baked into business culture across many fields. The technology and applications are far ahead of our reflections about intent, appropriateness, and responsibility. I want to focus on that word here, which I steal from my friends in the humanitarian field. What are our responsibilities when it comes to practicing data science? Here are a few examples of why this matters, and my recommendations for what to do about it.

http://www.slideshare.net/rahulbot/practicing-data-science-responsibly

People Think Algorithms are Neutral

I’d be surprised if you hadn’t heard about the flare-up about Facebook’s trending news feed recently. After breaking on Gizmodo if has been covered widely. I don’t want to debate the question of whether this is a “responsible” example or not. I do want to focus on what it reveals about the public’s perception of data science and technology. People got upset, because they assumed it was produced by a neutral algorithm, and this person that spoke with Gizmodo said it was biased (against conservative news outlets). The general public thinks algorithms are neutral, and this is a big problem.

Algorithms are artifacts of the cultural and social contexts of their creators and the world in which they operate. Using geographic data about population in the Boston area? Good luck separating that from the long history of redlining that created a racially segregated distribution of ownership. To be responsible we have to acknowledge and own that fact. Algorithms and data are not neutral third parties that operate outside of our world’s built-in assumptions and history.

Some Troubling Examples

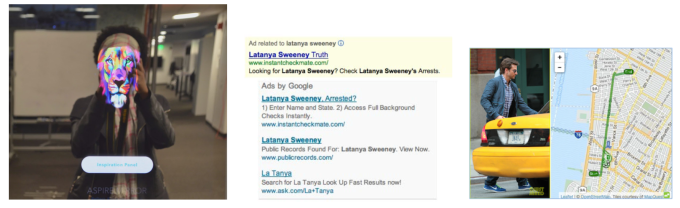

Lets flesh this out a bit more with some examples. First I look to Joy Boulamwini, a student colleague of mine in the Civic Media group at the MIT Media Lab. Joy is starting to write about “InCoding” – documenting the history of biases baked into the technologies around us, and proposing interventions to remedy them. One example is facial recognition software, which has consistently been trained on white male faces; to the point where she has to literally done a white-face mask to have the software recognize her. This just the tip of the iceberg in computer science, which has a long history of leaving out entire potential populations of users.

Another example is a classic one from Latanya Sweeney at Harvard. In 2013 She discovered a racial bias trained into the operation Google’s AdWords platform. When she searched for names that are more commonly given to African Americans (liked her own), the system popped up ads asking if the user wanted to do background checks or look for criminal records. This is an example of the algorithm reflecting built-in biases of the population using it, who believed that these names were more likely to be associated with criminal activity.

My third example comes from an open data release by the New York City taxi authority. They anonymized and then released a huge set of data about cab rides in the city. Some enterprising researchers realized that they had done a poor job of anonymizing the taxi medallion ids, and were able to de-anonymize the dataset. From there, Anthony Tockar was able to find strikingly juicy personal details about riders and their destinations.

A Pattern of Responsibility

Taking a step back form these three examples I see a useful pattern for thinking about what it means to practice data science with responsibility. You need to be responsible in your data creation, data impacts, and data use. I’ll explain each of those ideas.

Being responsible in your data collection means acknowledging the assumptions and biases baked into your data and your analysis. Too often these get thrown away while assessing the comparative performance between various models trained by a data scientist. Some examples where this has failed? Joy’s InCoding example is one of course, as is the classic Facebook “social contagion” study. A more troubling one is the poor methodology used by US NSA’s SkyNet program.

Being responsible in your data impacts means thinking about how your work will operate in the social context of its publication and use. Will the models you trained come with a disclaimer identifying the populations you weren’t able to get data from? What are secondary impacts that you can mitigate against now, before they come back to bite you? The discriminatory behavior of the Google AdWords results I mentioned earlier is one example. Another is the dynamic pricing used by the Princeton Review disproportionately effecting Asian Americans. A third are the racially correlated trends revealed in where Amazon offers same-day delivery (particularly in Boston).

Being responsible in your data use means thinking about how others could capture and use your data for their purposes, perhaps out of line with your goals and comfort zone. The de-anonymization of NYC taxi records I mentioned already is one example of this. Another is the recent harvesting and release of OKCupid dating profiles by researchers who considered it “public” data.

Leadership and Guidelines

The problem here is that we have little leadership and few guidelines for how to address these issues in responsible ways. I have yet to find an handbook for a field that scaffolds how to think about these concerns. As I’ve said, the technology is far ahead of our reflections on it together. However, that doesn’t mean that they aren’t smart people thinking about this.

In 2014 the White House brought together a team to create their report on Big Data: Seizing Opportunities, Preserving Values. The title itself reveals their acknowledgement of the threat some of these approaches have for the public good. Their recommendations include a number of things:

- extending the consumer bill of rights

- passing stronger data breach legislation

- protecting student centered data

- identifying discrimination

- revising the Electronic Communications Privacy Act

Legislations isn’t strong in this area yet (at least here in the US), but be aware that it is coming down the pipe. Your organization needs to be pro-active here, not reactive.

Just two weeks ago, the Council on Big Data, Ethics and Society released their “Perspectives” report. This amazing group of individuals was brought together to create this report by a federal NSF grant. Their recommendations span policy, pedagogy, network building, and area for future work. The include things like:

- new ethics review standards

- data-aware grant making

- case studies & curricula

- spaces to talk about this

- standards for data-sharing

These two reports are great reading to prime yourself on the latest high-level thinking coming out of more official US bodies.

So What Should We Do?

I’d synthesize all this into four recommendations for a business audience.

Define and maintain our organization’s values. Data science work shouldn’t operate in a vacuum. Your organizational goals, ethics, and values should apply to that work as well. Go back to your shared principles to decide what “responsible” data science means for you.

Do algorithmic QA (quality and assurance). In software development, the QA team is separate from the developers, and can often translate between the languages of technical development and customer needs. This model can server data science work well. Algorithmic QA can discover some of the pitfalls the creators of models might not.

Set up internal and and external review boards. It can be incredibly useful to have a central place where decisions are made about what data science work is responsible and what isn’t for your organization. We discussed models for this at a recent Stanford event I was part of.

Innovate with others in your field to create norms. This stuff is very new, and we are all trying to figure it out together. Create spaces to meet and discuss your approaches to this with others in your industry. Innovate together to stay ahead of regulation and legislation.

These four recommendations capture the fundamentals of how I think businesses need to be responding to the push to do data science in responsible ways.

This post is cross-posted on my datatherapy.org website.