Governments are putting in massive work to crack communications. Many companies are working hard to create surveillance tools used by our governments and many others. Many service providers are vulnerable to these issues. What fixes can we make as designers of technology? Morgan Marquis-Boire joined us for lunch at the Center for Civic Media to share some ideas (this post was written with Willow Brugh).

Morgan Marquis-Boire is a senior security engineer at Google specializing in incident response, forensics, and malware analysis (views expressed in this talk are not the views of his employer). For the last couple years, Morgan has focused on tracking and preventing digital attacks against high risk user groups – primarily journalists, dissidents, and activists. Increasingly, these attacks are being carried out by nation-states for espionage and surveillance purposes. Morgan studies them. Morgan also does technical analysis of surveillance tools at Citizen Lab at the University of Toronto. Recently, he has also been working with the Electronic Frontier Foundation on issues surrounding dissident suppression in Syria. Morgan is also cofounder of the Secure Domain Foundation.

Morgan starts out by clarifying the difference between targeted surveillance, which is focused on individuals, and mass surveillance across large populations.

Mass surveillance of communication networks often happens via deep packet inspection at Internet service providers and phone companies, which carry a lot of traffic. While these technologies can sometimes be used for benign purposes, they can also be used to catalogue people’s actions online.

Consider the case of Narus, for example, which was alleged to have supplied surveillance technologies to Mubarak in Egypt. Morgan also tells us the story of Mark Klein, who came forward in 2006 about Narus equipment being installed at the behest of the NSA. Another example of mass surveillance is XKeyscore, a system described as collecting “nearly anything a user does online” (email, metadata, etc). Apparently analysts can use this for ongoing interception of a user’s internet activity. According to the Washington Post, these systems store 1.7 billion emails per day. The French company Amesys is another example; their gear was found in Libya and Syria.

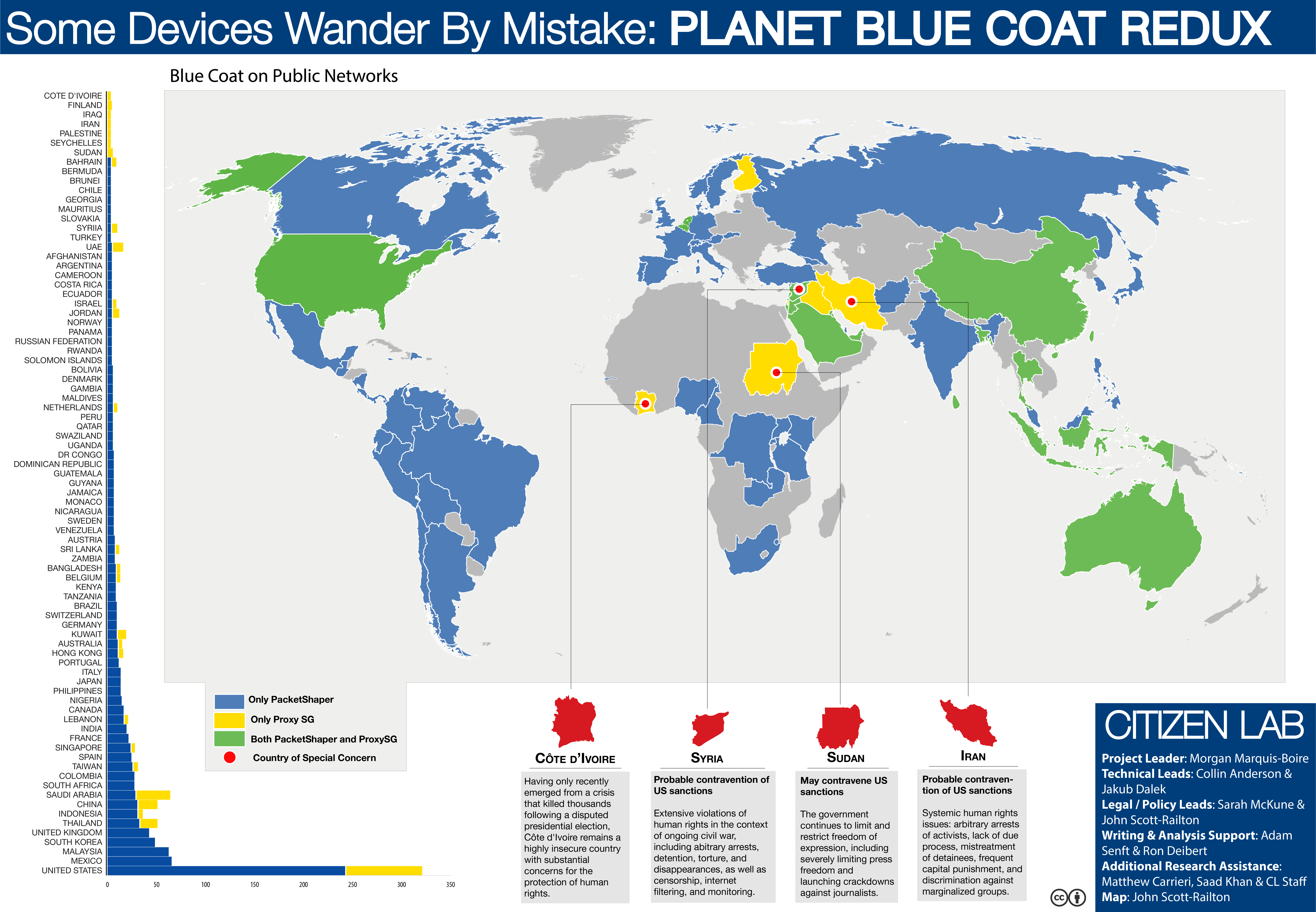

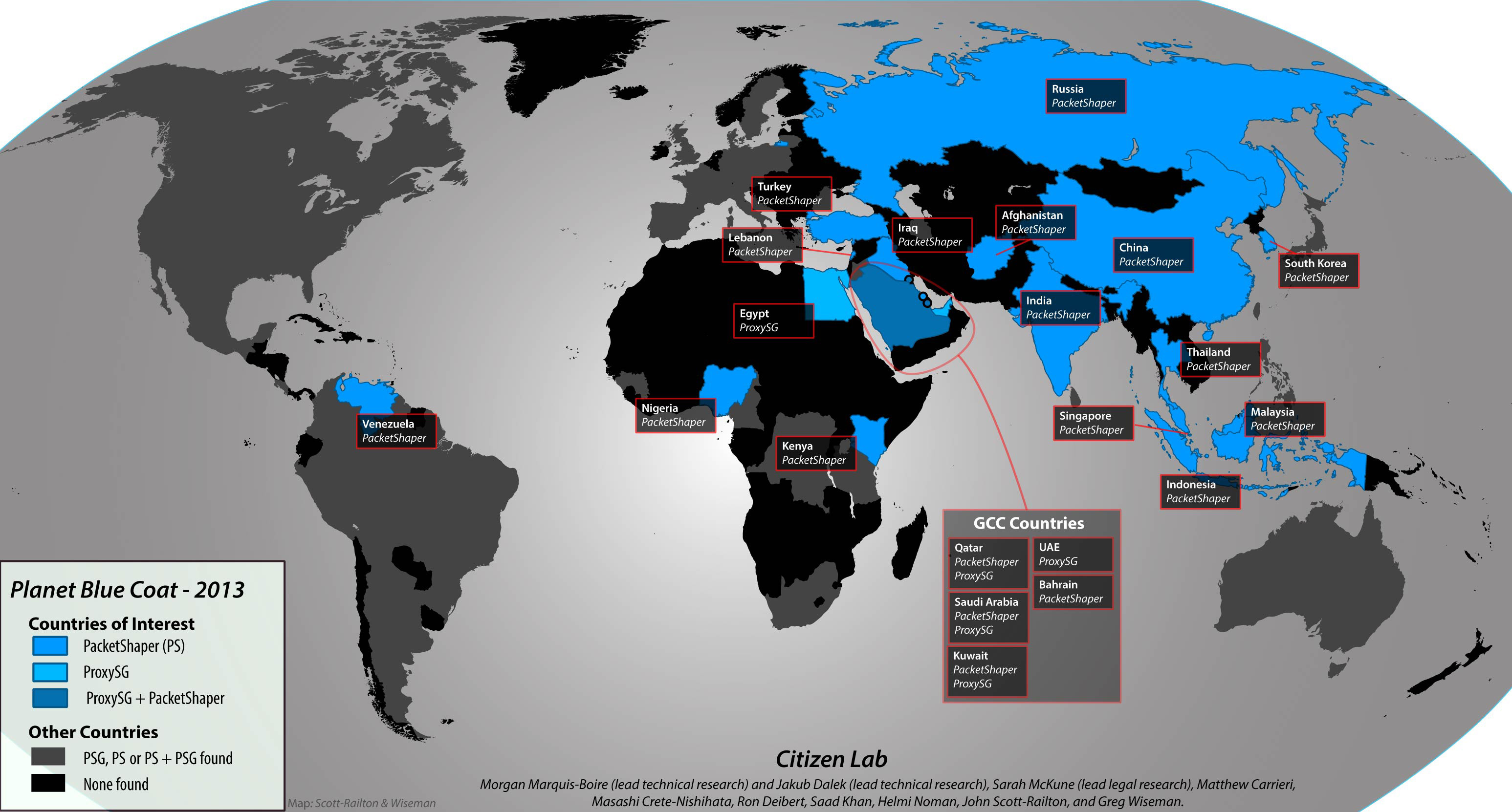

Morgan tells us the story of Blue Coat, a Silicon Valley vendor whose technologies do network monitoring, realtime network awareness, and the blocking of malicious content. Its gear can be used to monitor threats on your network, but it can also be used to block content online. In 2006, Blue Coat’s VP of sales went to a conference in Dubai and pitched it as lawful intercept technology. Most recently, they have been offering SSL intercept capability, the kind of thing that a nation state could use to access encrypted communications. Their gear was found in Syria in 2011, ostensibly to facilitate government surveillance.

As an engineer, Morgan wanted to map out places where these devices have been found around the world. Excluding corporate and commercial networks (where it’s best practice for these companies to have draconian practices), he focused on public access networks and provider networks. This was reported by the New York Times.

Morgan followed up on data from the Internet Census to find that Blue Coat systems are being used in countries like Sudan, Iran, and Syria. This is, prima facie, illegal. The Washington Post reported on this, and an investigation is ongoing.

Morgan tells us the story of cypherpunks in the 1990s who were worried about mass surveillance, who created encryption software, fought the encryption wars, and thought they had won. Systems like Tor, Whisper Systems, and HTTPS are widely available. But if they won, why is it that we’re still talking about this?

The Commercialisation of Targeted Surveillance

Surveillance has changed, says Morgan. The bulk of NSA work is being done by the NSA’s Tailored Access Operations, a team of hackers working for the US government whom Forbes called “the new code-breakers.” Another example is the People’s Liberation Army’s 2nd Bureau / 3rd Department in China. These groups target individuals by planting backdoors, tracking locations, and otherwise surveilling them. The German government has been using similar trojan backdoors in criminal investigations. The Dutch government has recently proposed laws that give them the right to install malware on anyone’s computer.

What’s malware? It’s more than just the idea of a computer virus. If any of these things happen to you, it’s not an issue of just cleaning your computer. “You don’t have a malware problem, you have an adversary problem…” Morgan quotes Crowdstrike.

Morgan tells us the story of Ahmed Mansoor, one of the UAE Five, whose computer was infected by software called “Remote Control System” or “Da Vinci” that is sold only to governments. Exploits created by Vupen were also found on the system. The story was covered by Bloomberg.

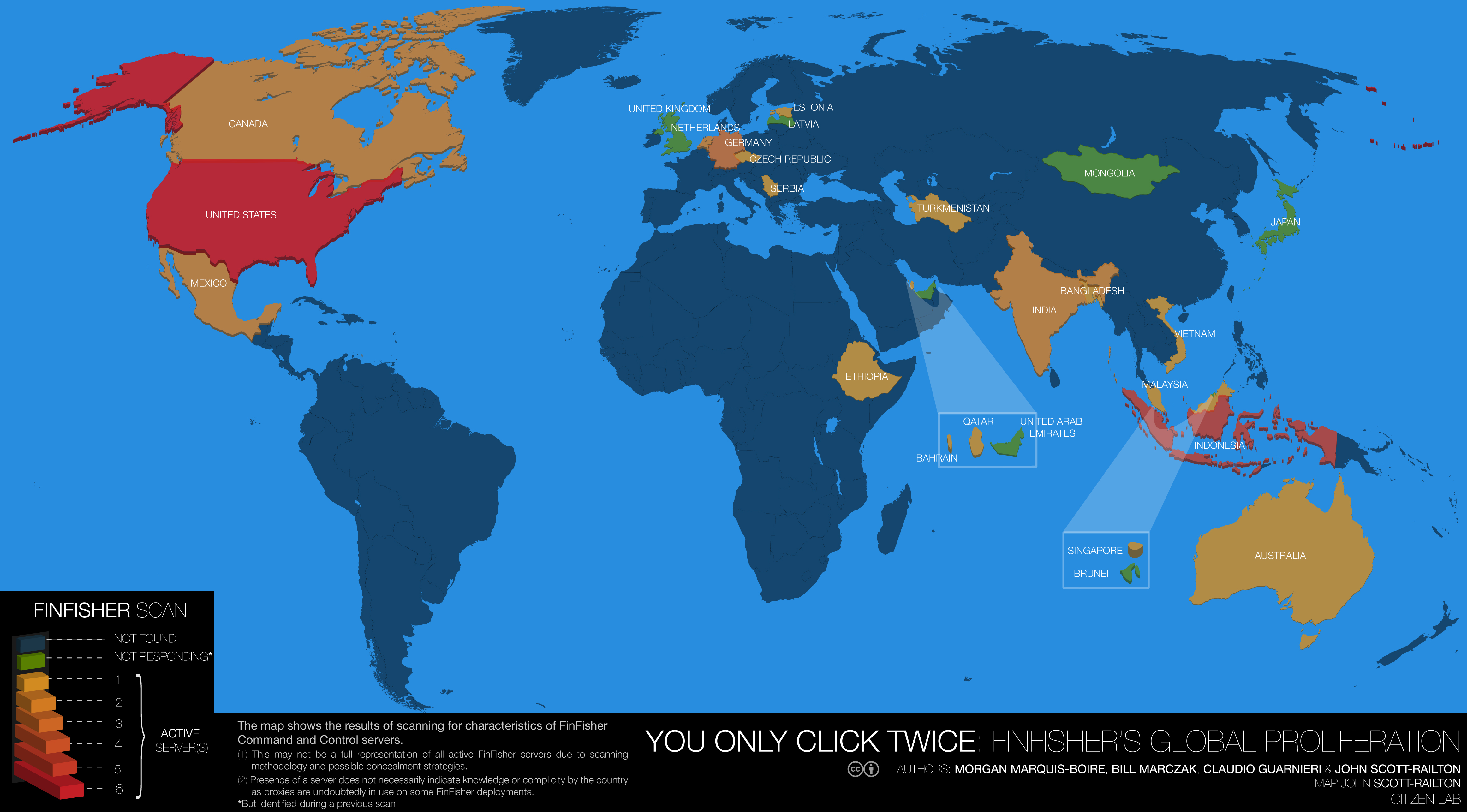

Morgan next tells us about Ala’a Shehabi, a British-born Bahraini activist and economics lecturer. Ala’a’s husband Ghazi has been in prison since 2011. On May 9, 2012, her machine was infected by a trojan when she opened two emails that looked genuine. Shehabi found evidence of the FinFisher system in her case. FinFisher is created by a company based in Munich that claims to sell it only to certain governments and to follow export restrictions. And yet it was found in Egypt and Bahrain.

These security threats are commercialised, targeted surveillance systems that are being sold to countries that typically lack the skills to develop them. It’s not clear that a server scan on its own is definitive; however, Morgan and his colleagues traced a system in Turkmenistan to the IP range of the communications ministry.

As Citizen Lab published these materials, journalists started to cover the spread of these technologies. When the Guardian investigated the offshore accounts used by these companies to mask transactions, the UK cracked down on the sale of spyware to foreign governments.

Anti-Dissident Campaigns in Syria

Morgan tells us about a series of technologies used against Syrian activists since January 2011. Skype has been used to deliver malware. Fake YouTube pages have sent malware to people. When the Facebook account of a prominent academic at the Sorbonne was compromised, his account was used to spread links to malware purporting to secure people’s Facebook usage. In one case, Morgan found himself clicking a video that included footage of someone whose throat is cut, who is then pushed into a pit and filmed bleeding to death.

The game is real, Morgan tells us. The targets are well studied, and the people who train them are well studied too. He shows us examples of compromised versions of legitimate surveillance circumvention software like FreeGate. As another example, Morgan tells us about a version of the Tor browser bundle that included Tor with a special extra that compromised the anonymity of the user.

Journalists are often targeted because tomorrow’s news is today’s intel. Very few journalists speak on the record about being compromised, so Morgan can’t tell us specific details. In one case, a media giant faced an advanced persistent threat in the form of a fake survey from another media organisation. One national daily paper appeared to be compromised using commercial government-only tools that appeared to originate from their own local government. In 2012, the Moroccan citizen media site Mamfakinch received an email attachment pretending to be a leak. The malware appeared to originate from Moroccan IP addresses.

Governments don’t always use surveillance; sometimes they can just force companies to hand over information. Morgan tells us about the US FISA Courts, which are being used to compel companies to give up information through demands that the companies are not allowed to disclose. Governments are going beyond requests about specific people to request that companies like Microsoft add backdoors to their services. This summer, Lavabit was given a legal order for secret keys.

Coercion Resistant Design

Morgan suggests that “as your web service increases in popularity, the likelihood that you will eventually be forced to coerce your security model approaches 1.” If we’re engineering honestly and properly, we need to think about this issue from day one. Here are several ideas for coercion resistant design.

- Split Key Storage is used by the DNS system. Restarting the DNS system requires keys held by at least five of seven people spread across multiple continents. People realized that DNS was important, so they engineered it this way.

- Forward Secrecy would have helped Lavabit. With forward secrecy, each session is encrypted with a unique, short-lived key, so that a later compromise of the server’s long-term key, or of a single session, doesn’t open up past conversations to decryption.

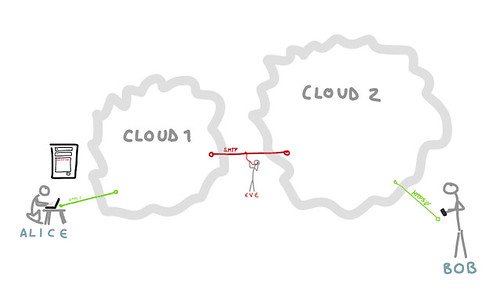

- Encryption by Default: in the late 2000s, we put everything under HTTPS. We celebrated too soon. We were very concerned with the ability of people to log into their email providers securely, so webmail clients are now encrypted. But the SMTP protocol is mostly not encrypted. Google does opportunistic encryption (STARTTLS), but many do not. With opportunistic encryption, the connection is encrypted only when both mail servers support it. So Morgan did an analysis of the top 20 mail domains in his inbox. Only 5 performed STARTTLS, and only 2 correctly used forward secrecy. Things are slightly better now, but this is a simple thing.

- Open Source also helps us solve problems of trust because we can examine the code for security.
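To make the split key idea concrete, here is a minimal sketch of a 5-of-7 threshold scheme using Shamir’s secret sharing. This is an assumption chosen for illustration: the talk does not say which scheme the DNS key holders use, and the function names `split_secret` and `recover_secret` are hypothetical. A real deployment would rely on an audited library and hardware-protected keys rather than code like this.

```python
import secrets

# 2**521 - 1 is a Mersenne prime; all arithmetic is done modulo this prime.
PRIME = 2**521 - 1


def split_secret(secret: int, threshold: int, shares: int) -> list[tuple[int, int]]:
    """Split `secret` into `shares` points; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in the range [0, PRIME)")
    if not 1 <= threshold <= shares:
        raise ValueError("threshold must be between 1 and the number of shares")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        # Horner's rule, reduced modulo PRIME at every step.
        acc = 0
        for c in reversed(coeffs):
            acc = (acc * x + c) % PRIME
        return acc

    # Each key holder receives one point (x, f(x)) with x = 1..shares.
    return [(x, evaluate(x)) for x in range(1, shares + 1)]


def recover_secret(points: list[tuple[int, int]]) -> int:
    """Recover f(0) by Lagrange interpolation over the supplied points."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        total = (total + yi * num * pow(den, -1, PRIME)) % PRIME
    return total


if __name__ == "__main__":
    key = secrets.randbits(128)                      # stand-in for a master key
    holders = split_secret(key, threshold=5, shares=7)
    print(recover_secret(holders[:5]) == key)        # any five holders suffice
```

The design point is that no single key holder, and no single jurisdiction, can be compelled to hand over the whole key: with fewer than five shares the interpolation yields an unrelated value.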

Engineering against the coercion of our security model is something we must do in order to protect our users. And we can do it. Don’t be privacy or security nihilists, Morgan tells us. We can design things.
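A rough version of the mail server survey Morgan describes can be sketched with Python’s standard library. This is not his actual method, which the talk does not detail; `check_mail_server` and `uses_forward_secrecy` are hypothetical names, the host passed in is assumed to be a domain’s MX hostname (looked up separately), and the forward secrecy test is a heuristic based on OpenSSL’s cipher naming.

```python
import smtplib
import ssl


def uses_forward_secrecy(cipher_name: str, protocol: str) -> bool:
    """Heuristic: TLS 1.3 always uses ephemeral key exchange; for earlier
    versions, OpenSSL names forward-secret suites with ECDHE- or DHE-."""
    if protocol == "TLSv1.3":
        return True
    return cipher_name.startswith(("ECDHE-", "DHE-"))


def check_mail_server(host: str, port: int = 25, timeout: float = 10.0) -> dict:
    """Report whether an SMTP server offers STARTTLS and which cipher it negotiates."""
    result = {"host": host, "starttls": False, "cipher": None, "forward_secrecy": False}
    with smtplib.SMTP(host, port, timeout=timeout) as smtp:
        smtp.ehlo()
        if not smtp.has_extn("starttls"):
            return result
        # The default context verifies certificates, so a server with an
        # invalid certificate raises ssl.SSLError here instead of reporting.
        smtp.starttls(context=ssl.create_default_context())
        result["starttls"] = True
        name, protocol, _bits = smtp.sock.cipher()
        result["cipher"] = name
        result["forward_secrecy"] = uses_forward_secrecy(name, protocol)
    return result
```

Calling `check_mail_server("mx.example.org")` (a placeholder hostname) needs outbound access to port 25, which many residential and cloud networks block, so results from a laptop may understate what mail servers see when talking to each other.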

Morgan addresses those of us who are creating new digital tools, the people making the next WhatsApp. If the best possible thing happens to your startup and it becomes hugely popular, these pressures will follow, so we should bake these things into our startups from the beginning.

Sasha points out that we have a once in decades opportunity to pass legislation that could change the balance on transparency. In the area of tools, the conversation has shifted back to the need to make these tools usable for lots of people. We need to encourage developers, venture capitalists, and others to find ways to create technologies that can do that.

Nathan asks Morgan about development frameworks and libraries for creating technology systems. Morgan says that he’s seen some of this done by the people who are brought in to scale the second version of systems. Nick Grossman mentions that this issue has been dead center for the venture capitalists that are funding startups to scale.

Nick asks: if the problem we have is a government surveillance problem, is investing in crypto a losing battle? Morgan thinks that this should be a process for the engineering community. This summer, he felt like “I’ve been breaking up bar fights while mass genocide was happening outside. I should just go to sleep.” But then he gets up, thinks about his skills and realizes that there are still engineering responses to be done.

Ethan and Morgan discuss the intersection between the Cute Cat Theory and Morgan’s Coercion Resistant Design theory. If, as Ethan argues, widely used platforms broaden the audience of activist media and make them more DDoS resistant, then it’s uncomfortable to think that those widely used platforms are highly likely to be compromised. However, that isn’t an argument for using new, untested systems. They agree that one valuable approach would be to build security by design into these large services.